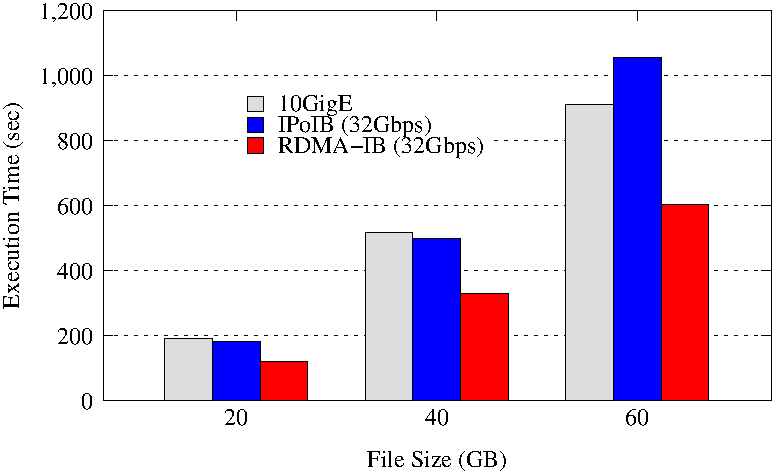

Sort Execution Time on HDD

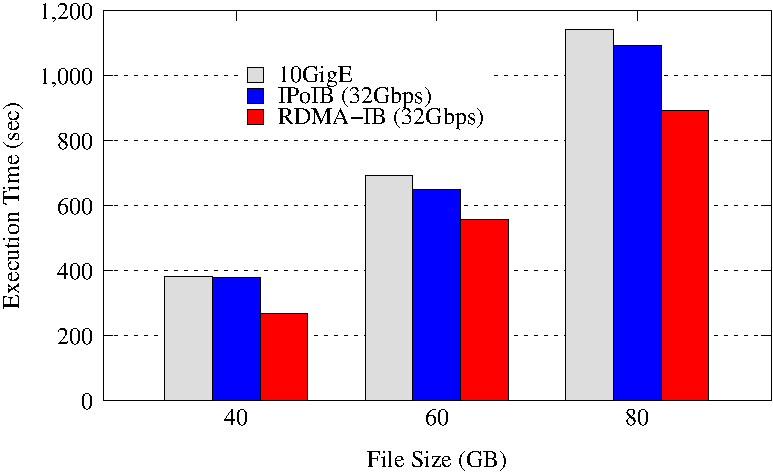

TeraSort Execution Time on HDD

Experimental Testbed: Each node of our testbed has two 4-core 2.53 GHz Intel Xeon E5630 (Westmere) processors and 24 GB of main memory. The nodes support PCI Express Gen2 x16 interfaces and are equipped with Mellanox ConnectX QDR HCAs with PCI Express Gen2 interfaces. The operating system is Red Hat Enterprise Linux Server release 6.4 (Santiago).

These experiments are performed on 8 DataNodes with a total of 32 maps and 16 reduces. Each DataNode has a single 1 TB HDD. The HDFS block size is set to 256 MB. Each TaskTracker launches 4 concurrent maps and 2 concurrent reduces. The NameNode runs on a separate node of the Hadoop cluster, and the benchmark is run on the NameNode.

The RDMA-IB design improves the job execution time of Sort by 34% to 43% and of TeraSort by 18% to 29% compared to IPoIB (32 Gbps). Compared to 10GigE, the improvement is 34% to 37% for Sort and 22% to 30% for TeraSort.
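The improvement figures quoted on this page are most naturally read as the relative reduction in job execution time against the baseline interconnect. The source does not spell out the formula, so the sketch below assumes improvement = (T_baseline - T_RDMA) / T_baseline. The function name `improvement_pct` and the timings are hypothetical and are not values read from the figures.

```python
def improvement_pct(baseline_s: float, rdma_s: float) -> float:
    """Percentage reduction in execution time relative to the baseline.

    Assumed definition: 100 * (baseline - rdma) / baseline.
    """
    if baseline_s <= 0:
        raise ValueError("baseline time must be positive")
    return 100.0 * (baseline_s - rdma_s) / baseline_s


# Hypothetical timings in seconds, NOT measurements from the figures.
ipoib_s = 500.0
rdma_s = 330.0

print(f"{improvement_pct(ipoib_s, rdma_s):.1f}%")  # prints "34.0%"
```

Under this definition, a 34% improvement means the RDMA-IB job takes 66% of the baseline time, which is a speedup factor of roughly 1.5x rather than 1.34x.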

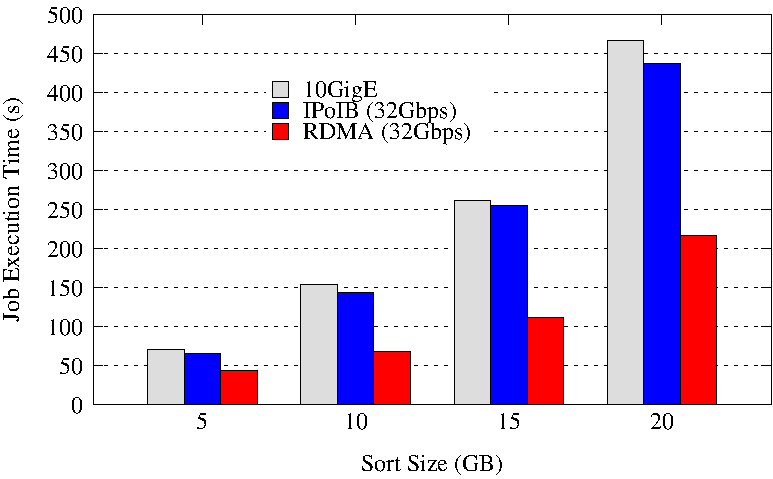

Sort Execution Time on SSD

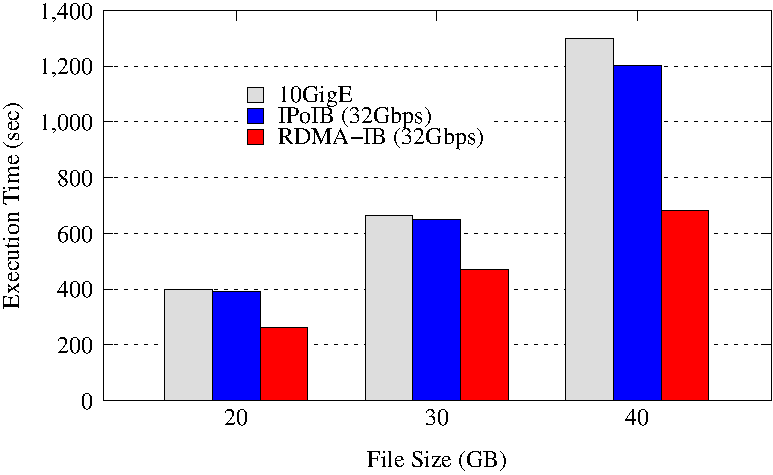

TeraSort Execution Time on SSD

Experimental Testbed: Each node of our testbed has two 4-core 2.53 GHz Intel Xeon E5630 (Westmere) processors and 24 GB of main memory. The nodes support PCI Express Gen2 x16 interfaces and are equipped with Mellanox ConnectX QDR HCAs with PCI Express Gen2 interfaces. The operating system is Red Hat Enterprise Linux Server release 6.4 (Santiago).

These experiments are performed on 4 DataNodes with a total of 16 maps and 8 reduces. Each DataNode has a single 300 GB OCZ VeloDrive PCIe SSD. The HDFS block size is set to 256 MB. Each TaskTracker launches 4 concurrent maps and 2 concurrent reduces. The NameNode runs on a separate node of the Hadoop cluster, and the benchmark is run on the NameNode.

The RDMA-IB design improves the job execution time of Sort by 33% to 46% and of TeraSort by 28% to 44% compared to IPoIB (32 Gbps). Compared to 10GigE, the improvement is 37% to 50% for Sort and 30% to 48% for TeraSort.

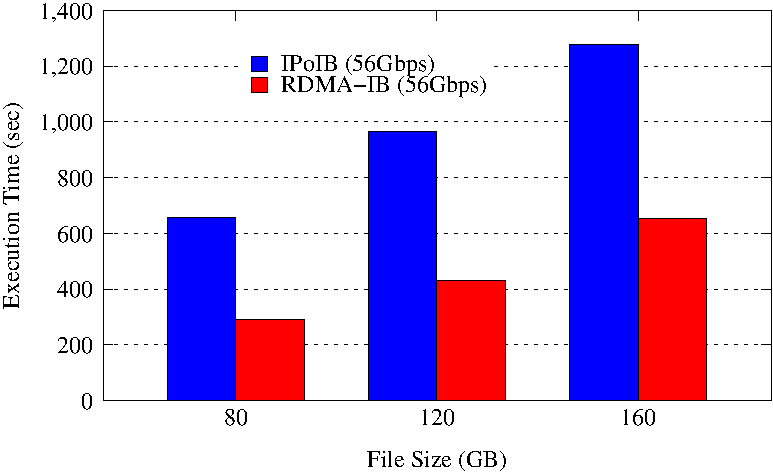

Sort Execution Time on HDD

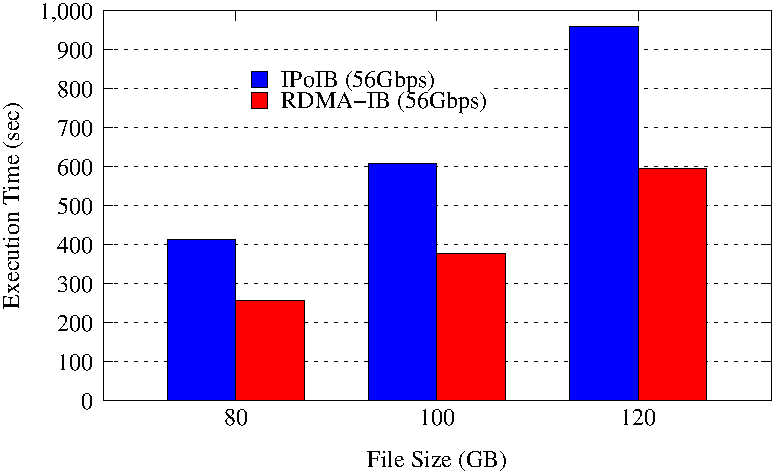

TeraSort Execution Time on HDD

Experimental Testbed: Each node of our testbed is a dual-socket system with two octa-core Intel Sandy Bridge (E5-2680) processors running at 2.70 GHz. Each node has 32 GB of main memory, an SE10P (B0-KNC) co-processor, and a Mellanox IB FDR MT4099 HCA. The host processors run CentOS release 6.3 (Final).

The Sort experiments are performed on 32 DataNodes with a total of 128 maps and 57 reduces. Each DataNode has a single 80 GB HDD. The HDFS block size is set to 256 MB. Each TaskTracker launches 4 concurrent maps and 2 concurrent reduces. The NameNode runs on a separate node of the Hadoop cluster, and the benchmark is run on the NameNode. The RDMA-IB design improves the job execution time of Sort by 49% to 56% compared to IPoIB (56 Gbps).

The TeraSort experiments are performed on 16 DataNodes with a total of 64 maps and 32 reduces. The RDMA-IB design improves the job execution time of TeraSort by 38% compared to IPoIB (56 Gbps).
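The map and reduce totals quoted for each experiment can be compared against the task slots the cluster offers at once. The sketch below assumes one TaskTracker per DataNode and assumes that the 4 maps / 2 reduces per TaskTracker setting also applies to the 16-node TeraSort run, which the text does not restate. The `Run` class and the run labels are hypothetical names introduced for illustration only.

```python
from dataclasses import dataclass

# Per-TaskTracker concurrency quoted in the text. Applying it to the
# 16-node TeraSort run is an assumption; that paragraph does not restate it.
MAPS_PER_TRACKER = 4
REDUCES_PER_TRACKER = 2


@dataclass(frozen=True)
class Run:
    label: str      # descriptive label chosen for this sketch
    datanodes: int  # assumed: one TaskTracker per DataNode
    maps: int
    reduces: int

    @property
    def map_slots(self) -> int:
        return self.datanodes * MAPS_PER_TRACKER

    @property
    def reduce_slots(self) -> int:
        return self.datanodes * REDUCES_PER_TRACKER


# DataNode, map, and reduce counts are taken from the text above.
RUNS = [
    Run("Sort/TeraSort, HDD, 8 DataNodes", 8, 32, 16),
    Run("Sort/TeraSort, SSD, 4 DataNodes", 4, 16, 8),
    Run("Sort, HDD, 32 DataNodes", 32, 128, 57),
    Run("TeraSort, HDD, 16 DataNodes", 16, 64, 32),
]

for run in RUNS:
    print(
        f"{run.label}: maps {run.maps}/{run.map_slots}, "
        f"reduces {run.reduces}/{run.reduce_slots}"
    )
```

Under these assumptions, every configuration matches its concurrent slot count exactly, except the 32-DataNode Sort run, where 57 reduces occupy 57 of the 64 available reduce slots.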